-

Duane Nykamp

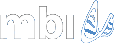

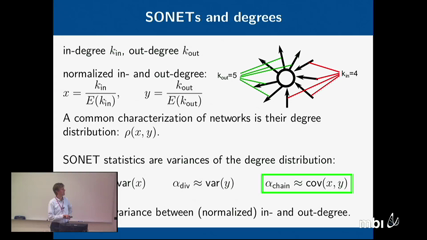

We introduce a random network model in which one can prescribe the frequency of second-order edge motifs. We derive effective equations for the activity of spiking neuron models coupled via such networks. A key consequence of the motif-induced edge correlations is that one cannot derive closed equations for the average activity of the nodes (the average firing rates of the neurons) but instead must develop the equations in terms of the average activity of the edges (the synaptic drives). As a result, the network topology increases the dimension of the effective dynamics and allows for a larger repertoire of behavior. We demonstrate this behavior through simulations of spiking neuronal networks.
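The simplest second-order motif to control is the reciprocal (bidirectional) connection. The Python below is a minimal sketch under stated assumptions, not the construction used in the talk. It samples each unordered pair of nodes independently so that every directed edge has probability `p`, while reciprocal pairs occur with probability `p_recip` instead of the `p**2` of an Erdős-Rényi graph. Because pairs are independent, convergent, divergent and chain motifs stay at their Erdős-Rényi frequencies here. Prescribing those as well, as the abstract describes, requires correlations across pairs that this sketch does not attempt. The function names and the normalization `alpha_recip = p_recip / p**2 - 1` are hypothetical choices for illustration.

```python
import random


def reciprocal_motif_network(n, p, p_recip, seed=0):
    """Hypothetical sketch: directed random graph on n nodes in which every
    edge i->j has marginal probability p and every unordered pair {i, j} is
    reciprocally connected (i->j and j->i) with probability p_recip.
    Pairs are sampled independently, so only the reciprocal motif departs
    from an Erdos-Renyi graph."""
    if not (0.0 <= p_recip <= p and 1.0 - 2.0 * p + p_recip >= 0.0):
        raise ValueError("need 0 <= p_recip <= p and 1 - 2p + p_recip >= 0")
    rng = random.Random(seed)
    edges = set()
    for i in range(n):
        for j in range(i + 1, n):
            u = rng.random()
            if u < p_recip:                  # both directions, prob p_recip
                edges.add((i, j))
                edges.add((j, i))
            elif u < p:                      # i -> j only, prob p - p_recip
                edges.add((i, j))
            elif u < 2.0 * p - p_recip:      # j -> i only, prob p - p_recip
                edges.add((j, i))
    return edges


def motif_stats(n, edges, p):
    """Return (edge density, fraction of reciprocal pairs, alpha_recip)."""
    density = len(edges) / (n * (n - 1))
    recip_pairs = sum(1 for (i, j) in edges if i < j and (j, i) in edges)
    recip_frac = recip_pairs / (n * (n - 1) / 2)
    alpha_recip = recip_frac / p ** 2 - 1.0  # 0 for an Erdos-Renyi graph
    return density, recip_frac, alpha_recip


if __name__ == "__main__":
    n, p, p_recip = 400, 0.1, 0.02
    edges = reciprocal_motif_network(n, p, p_recip)
    print(motif_stats(n, edges, p))
```

With `n = 400`, `p = 0.1` and `p_recip = 0.02`, the measured density should be close to 0.1 and `alpha_recip` close to 1. That means roughly twice as many reciprocal pairs as an Erdős-Rényi graph of the same density.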

-

Marty Golubitsky

Wilson's Rivalry Networks and Derived Patterns

-

Lai-Sang Young

I will report on recent work proposing that the network dynamics of the mammalian visual cortex are neither homogeneous nor synchronous. Instead, they are highly structured and strongly shaped by temporally localized barrages of excitatory and inhibitory firing that we call "multiple-firing events" (MFEs). Our proposal is based on a careful study of a network of spiking neurons built to reflect the coarse physiology of a small patch of layer 2/3 of V1. When appropriately benchmarked, this network can reproduce the qualitative features of a range of phenomena observed in the real visual cortex, including orientation tuning, spontaneous background patterns, surround suppression and gamma-band oscillations. Detailed investigation of the relevant regimes reveals causal relationships among dynamical events, driven by strong competition between the excitatory and inhibitory populations. Testable predictions are proposed, and challenges for mathematical neuroscience will also be discussed. This is joint work with Aaditya Rangan.

-

Paul Bressloff

Neural fields model the large-scale dynamics of spatially structured cortical networks in terms of continuum integro-differential equations, whose associated integral kernels represent the spatial distribution of neuronal synaptic connections. The advantage of a continuum rather than a discrete representation of spatially structured networks is that various techniques from the analysis of PDEs can be adapted to study the nonlinear dynamics of cortical patterns, oscillations and waves. In this talk we consider a neural field model of binocular rivalry waves in primary visual cortex (V1), which are thought to be the neural correlate of the wave-like propagation of perceptual dominance during binocular rivalry. Binocular rivalry is the phenomenon in which perception switches back and forth between different images presented to the two eyes. The resulting fluctuations in perceptual dominance and suppression provide a basis for non-invasive studies of the human visual system and the identification of possible neural mechanisms underlying conscious visual awareness. We derive an analytical expression for the speed of a binocular rivalry wave as a function of various neurophysiological parameters. We then show how properties of the wave are consistent with the wave-like propagation of perceptual dominance observed in recent psychophysical experiments. In addition to providing an analytical framework for studying binocular rivalry waves, we show how neural field methods provide insights into the mechanisms underlying the generation of the waves. In particular, we highlight the important role of slow adaptation in providing a "symmetry breaking mechanism" that allows waves to propagate. We end by discussing recent extensions of the work that incorporate the effects of noise and the detailed functional architecture of V1.
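For readers less familiar with neural fields, a commonly used single-population (Amari-type) form is shown below as a generic illustration. Here $u(x,t)$ is the activity at cortical position $x$, $w$ is the synaptic weight kernel, $F$ is a sigmoidal firing-rate function, $I$ is an external input and $\tau$ is a time constant. This is not the rivalry model from the talk. Rivalry models of this kind typically couple two such populations, one driven by each eye, through mutual inhibition and add a slow adaptation variable; their exact equations are not reproduced here.

$$
\tau \frac{\partial u(x,t)}{\partial t} = -u(x,t) + \int_{-\infty}^{\infty} w(x-y)\,F\big(u(y,t)\big)\,dy + I(x,t)
$$

The integral term is the "integral kernel" mentioned above: it sums the firing of neurons at every position $y$, weighted by the connection strength $w(x-y)$, which depends only on distance in this translation-invariant form.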

-

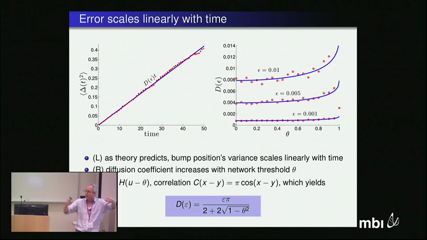

Bard Ermentrout

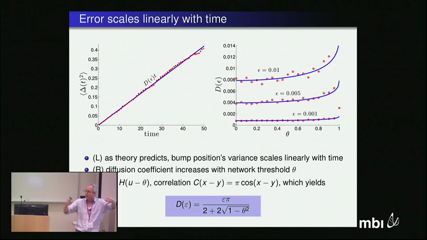

We study the effects of noise on stationary pulse solutions (bumps) in spatially extended neural fields. The dynamics of a neural field are described by an integrodifferential equation whose integral term characterizes synaptic interactions between neurons at different spatial locations in the network. Translationally symmetric neural fields support a continuum of stationary bump solutions, which may be centered at any spatial location. Random fluctuations are introduced by modeling the system as a spatially extended Langevin equation with additive noise. For nonzero noise, bumps are shown to wander about the domain in a purely diffusive way, and the associated diffusion coefficient can be approximated using a small-noise expansion. When the (continuous) translation symmetry of the system is broken by spatially heterogeneous inputs or synapses, bumps in the stochastic neural field can become temporarily pinned to a finite number of locations in the network. As a result, the effective diffusion of the bump is reduced compared with the homogeneous case. As the modulation frequency of this heterogeneity increases, the effective diffusion of bumps in the network approaches that of the network with spatially homogeneous weights. We end with simulations of spiking models that show the same dynamics. (This is joint work with Zachary Kilpatrick, UH.)
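Small-noise analyses of this kind typically reduce the bump's dynamics to a stochastic equation for its center $\Delta(t)$. That center diffuses freely in the homogeneous case and moves in an effective potential once symmetry is broken. The sketch below is hypothetical: the potential `U(Delta) = amplitude * cos(n_wells * Delta)` and the values of `amplitude`, `n_wells` and `diffusion` are invented stand-ins, not quantities derived from the talk's neural field. It integrates this reduced Langevin equation with the Euler-Maruyama scheme and compares the mean squared displacement with and without the potential. Because the amplitude is held fixed, the sketch does not capture the abstract's dependence on modulation frequency.

```python
import math
import random


def bump_position_msd(amplitude, n_wells=4, diffusion=0.1, t_end=40.0,
                      dt=0.01, n_trials=100, seed=0):
    """Mean squared displacement of a bump center Delta(t) under the
    hypothetical reduced Langevin equation
        dDelta = -U'(Delta) dt + sqrt(2 * diffusion) dW,
        U(Delta) = amplitude * cos(n_wells * Delta),
    integrated with the Euler-Maruyama scheme. amplitude = 0 gives the
    translation-symmetric (purely diffusive) case."""
    rng = random.Random(seed)
    steps = int(round(t_end / dt))
    kick = math.sqrt(2.0 * diffusion * dt)      # std of each noise increment
    start = math.pi / n_wells                   # a minimum of U when amplitude > 0
    total = 0.0
    for _ in range(n_trials):
        x = start
        for _ in range(steps):
            drift = amplitude * n_wells * math.sin(n_wells * x)  # equals -U'(x)
            x += drift * dt + kick * rng.gauss(0.0, 1.0)
        total += (x - start) ** 2
    return total / n_trials


if __name__ == "__main__":
    free = bump_position_msd(amplitude=0.0)
    pinned = bump_position_msd(amplitude=0.3)
    print(f"MSD homogeneous: {free:.2f}, MSD with heterogeneity: {pinned:.2f}")
```

For pure diffusion the mean squared displacement should be near `2 * diffusion * t_end = 8`. With the periodic potential it is much smaller, because the center spends long stretches trapped near a minimum and only occasionally hops to a neighboring one.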

-

Carson Chow

The dynamics of neural networks have traditionally been analyzed either for small systems or in the infinite-size mean-field limit. While both approaches have led to great strides in understanding these systems, large but finite-sized networks have been explored much less analytically. Here, I will show how the dynamical behavior of finite-sized systems can be inferred by expanding in the inverse system size around the mean-field solution. The approach can also be used to solve the inverse problem of inferring the effective dynamics of a single neuron embedded in a large network when only incomplete information is available. The formalism I will outline can be generalized to any high-dimensional dynamical system.
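As a generic illustration of what "expanding in the inverse system size around the mean-field solution" can look like, consider the block below. It is a standard second-order moment expansion, which may differ from the formalism presented in the talk. Assume a population model whose state $x$ (for example, the fraction of active neurons in each population) has drift $F(x)$ and fluctuations with diffusion matrix $B(x)/N$. Write $\mu$ for the mean, $\Sigma$ for the covariance and $A = \partial F/\partial x$ for the Jacobian. Truncating at order $1/N$ gives:

$$
\begin{aligned}
\frac{d\mu_i}{dt} &= F_i(\mu) + \frac{1}{2}\sum_{j,k} \frac{\partial^2 F_i}{\partial x_j\,\partial x_k}(\mu)\,\Sigma_{jk} + O(N^{-2}),\\
\frac{d\Sigma}{dt} &= A(\mu)\,\Sigma + \Sigma\,A(\mu)^{\top} + \frac{1}{N}\,B(\mu) + O(N^{-2}).
\end{aligned}
$$

Since $\Sigma = O(1/N)$, the finite-size correction to the mean is $O(1/N)$. Letting $N \to \infty$ recovers the mean-field equation $d\mu/dt = F(\mu)$ with vanishing fluctuations.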

-

Sophie Deneve

Neural networks can integrate sensory information and generate continuously varying outputs, even though individual neurons communicate only with spikes, which are all-or-none events. Here we show how this can be done efficiently if spikes communicate "prediction errors" between neurons. We focus on the implementation of linear dynamical systems and derive a spiking network model from a single optimization principle. Our model naturally accounts for two puzzling aspects of cortex. First, it provides a rationale for the tight balance and correlations between excitation and inhibition. Second, it predicts asynchronous and irregular firing as a consequence of predictive population coding, even in the limit of vanishing noise. We show that our spiking networks have error-correcting properties that make them far more accurate and robust than comparable rate models. Our approach suggests that spike times do matter when considering how the brain computes, and that the reliability of cortical representations could have been strongly underestimated.
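The core idea of spikes as prediction errors can be seen in a stripped-down, noise-free case: a population tracking a given one-dimensional signal through a leaky readout. The sketch below is a hypothetical illustration and does not reproduce the model described in the talk, which covers general linear dynamical systems. The names `spike_code_track`, `gammas` and `leak` and all parameter values are assumptions. Each neuron's "voltage" is its projection of the readout error. A neuron fires only when adding its decoding weight to the readout reduces the squared error, which is equivalent to the voltage exceeding half the squared weight.

```python
import math
import random


def spike_code_track(signal, gammas, leak=10.0, dt=1e-3):
    """Greedy spike coding of a 1-D signal (hypothetical minimal sketch).

    The readout decays as dxhat/dt = -leak * xhat and jumps by gammas[i]
    whenever neuron i spikes. Neuron i's voltage is its projected prediction
    error V_i = gammas[i] * (x - xhat); it may spike when
    V_i > gammas[i]**2 / 2, which holds exactly when adding gammas[i] to
    xhat lowers the squared error. At most one neuron (the one furthest
    above threshold) fires per time step."""
    xhat = 0.0
    errors, spikes = [], [0] * len(gammas)
    for x in signal:
        xhat -= leak * xhat * dt                      # leaky readout
        err = x - xhat                                # prediction error
        margins = [g * err - 0.5 * g * g for g in gammas]
        best = max(range(len(gammas)), key=margins.__getitem__)
        if margins[best] > 0.0:                       # spike reduces error
            xhat += gammas[best]
            spikes[best] += 1
        errors.append(x - xhat)
    return errors, spikes


if __name__ == "__main__":
    rng = random.Random(0)
    # Ten neurons with positive and ten with negative decoding weights.
    gammas = [s * rng.uniform(0.08, 0.12) for s in (1, -1) * 10]
    dt = 1e-3
    signal = [math.sin(2 * math.pi * k * dt) for k in range(2000)]
    errors, spikes = spike_code_track(signal, gammas, dt=dt)
    print(max(abs(e) for e in errors), sum(spikes))
```

Because a spike fires as soon as the error exceeds half of some decoding weight, the tracking error in this sketch stays bounded by about half the largest weight rather than growing with the signal.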

-

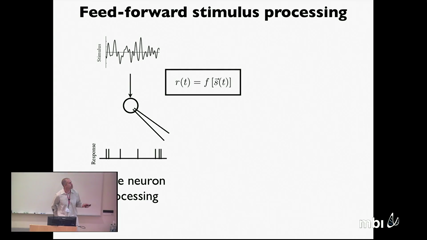

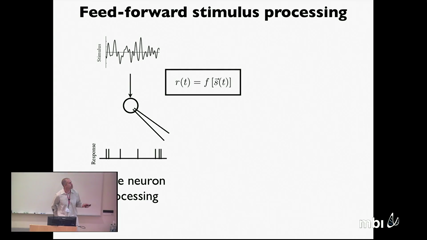

Daniel Butts

Inhibition is a component of nearly every neural system and an increasingly prevalent component of theoretical network models. However, its role in sensory processing is often difficult to measure or infer directly. Using a nonlinear modeling framework that can infer the presence and stimulus tuning of inhibition from extracellular and intracellular recordings, I will describe different forms of inferred inhibition (subtractive and multiplicative) and suggest multiple roles for them in sensory processing. I will refer primarily to studies in the retina, where inhibition likely contributes to contrast adaptation, to the generation of precise timing, and to the diversity of computation among different retinal ganglion cell types. I will also describe roles of inhibition in shaping sensory processing in other areas, including auditory areas and the visual cortex. Understanding the role of inhibition in neural processing can both inform a richer view of how single-neuron processing contributes to network behavior and provide tools to validate network models using neural data.
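The difference between subtractive and multiplicative inhibition can be illustrated with a toy orientation-tuning curve. The sketch below is a caricature, not the nonlinear modeling framework from the talk. Its Gaussian excitatory tuning, untuned inhibition strength and rectifying output nonlinearity are all assumptions chosen for illustration. Subtractive inhibition combined with a threshold removes weak responses and so narrows tuning. Multiplicative inhibition rescales the whole curve and leaves its shape unchanged.

```python
import math


def relu(u):
    """Rectifying output nonlinearity (firing rates cannot be negative)."""
    return max(u, 0.0)


def tuning_curves(inhibition=0.3, width_deg=30.0):
    """Toy orientation tuning under two forms of untuned inhibition.

    Excitatory drive E(theta) is a Gaussian of orientation.
    Subtractive:    r = relu(E - inhibition)
    Multiplicative: r = relu(E) * (1 - inhibition)"""
    thetas = list(range(-90, 91, 10))
    drive = [math.exp(-t * t / (2.0 * width_deg ** 2)) for t in thetas]
    control = [relu(e) for e in drive]
    subtractive = [relu(e - inhibition) for e in drive]
    multiplicative = [relu(e) * (1.0 - inhibition) for e in drive]
    return thetas, control, subtractive, multiplicative


if __name__ == "__main__":
    thetas, ctrl, sub, mul = tuning_curves()
    for row in zip(thetas, ctrl, sub, mul):
        print("%4d  %.3f  %.3f  %.3f" % row)
```

Counting the orientations with a nonzero response shows the narrowing under subtraction. Dividing the multiplicative curve by the control curve gives the same constant at every orientation.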

-

Sukbin Lim

Persistent neural activity in the absence of a stimulus has been identified as a neural correlate of working memory, but how such activity is maintained by neocortical circuits remains unknown. Here we show that the inhibitory and excitatory microcircuitry of neocortical memory-storing regions is sufficient to implement a corrective feedback mechanism that enables persistent activity to be maintained stably for prolonged durations. When recurrent excitatory and inhibitory inputs to memory neurons are balanced in strength, but offset in time, drifts in activity trigger a corrective signal that counteracts memory decay. Circuits containing this mechanism temporally integrate their inputs, generate the irregular neural firing observed during persistent activity, and are robust against common perturbations that severely disrupt previous models of short-term memory storage. This work reveals a mechanism for the accumulation and storage of memories in neocortical circuits based upon principles of corrective negative feedback widely used in engineering applications.
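The corrective signal can be seen in a linear rate caricature. This sketch assumes excitation is the slower of the two synaptic inputs; it is not the spiking circuits from the talk. Suppose excitatory and inhibitory recurrent inputs have equal strength `j` but time constants `tau_e > tau_i`. For slowly varying rate r, each synaptic variable lags the rate by approximately its own time constant, so s_E ≈ r - tau_e dr/dt and s_I ≈ r - tau_i dr/dt. The net recurrent input j(s_E - s_I) is then approximately -j(tau_e - tau_i) dr/dt, a feedback that opposes any change in activity. This stretches the decay time from tau to roughly tau_eff = tau + j(tau_e - tau_i). All parameter values below are assumptions for illustration.

```python
import math


def decay_time(j=50.0, tau=0.01, tau_e=0.1, tau_i=0.01, dt=1e-4, t_max=20.0):
    """Time for the rate r to fall below 1/e in the linear circuit
        tau   dr/dt   = -r + j * s_e - j * s_i   (E and I balanced in strength)
        tau_e ds_e/dt = -s_e + r                 (slow excitatory synapses)
        tau_i ds_i/dt = -s_i + r                 (fast inhibitory synapses)
    starting from r = s_e = s_i = 1, using forward Euler integration.
    Returns math.inf if r has not decayed by t_max."""
    r = s_e = s_i = 1.0
    t = 0.0
    while t < t_max:
        dr = (-r + j * s_e - j * s_i) / tau
        ds_e = (r - s_e) / tau_e
        ds_i = (r - s_i) / tau_i
        r, s_e, s_i = r + dt * dr, s_e + dt * ds_e, s_i + dt * ds_i
        t += dt
        if r < math.exp(-1.0):
            return t
    return math.inf


if __name__ == "__main__":
    tau_eff = 0.01 + 50.0 * (0.1 - 0.01)   # predicted slow time constant
    print("with feedback:", decay_time(), "predicted:", tau_eff)
    print("without recurrence:", decay_time(j=0.0))
```

Setting `j = 0` removes the recurrent inputs, and activity then decays on the intrinsic time constant `tau`. With balanced but temporally offset feedback, the decay is slower by more than two orders of magnitude in this example.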
